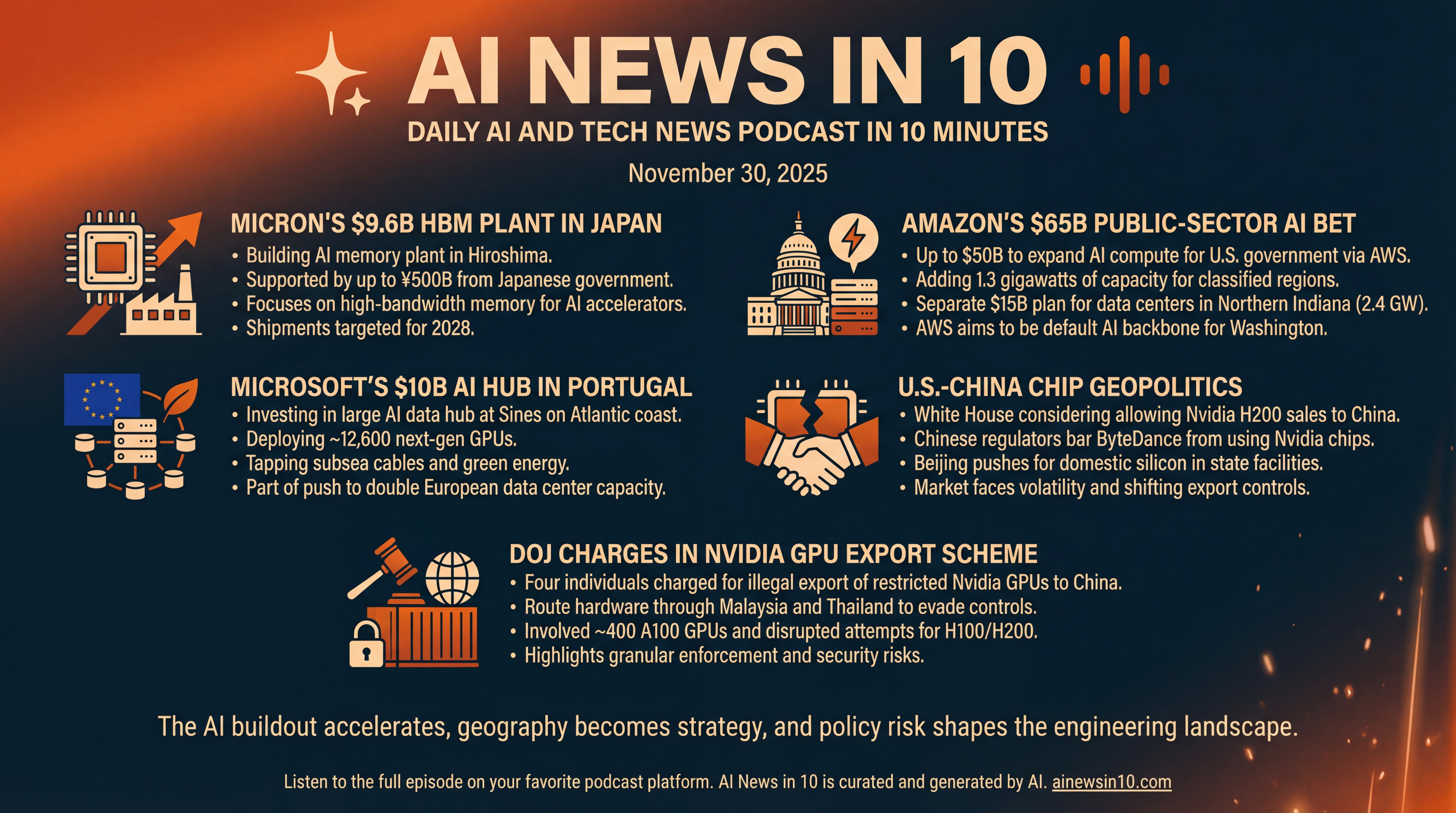

Chips, Gigawatts, and Geopolitics Collide

AI infrastructure surges across Japan, the U.S., and Europe as Micron, Amazon, and Microsoft scale up — while policy shifts and enforcement reshape the chip landscape. Inside: HBM in Hiroshima, AWS’s government buildout, Microsoft’s Sines hub, an H200 export debate, and a DOJ case targeting illicit GPU shipments.

Episode Infographic

Show Notes

Welcome to AI News in 10, your top AI and tech news podcast in about 10 minutes. AI tech is amazing and is changing the world fast. For example, this entire podcast is curated and generated by AI using my and my kids’ cloned voices...

Here’s what’s new today... Sunday, November 30, 2025. The AI chip buildout is accelerating across three continents. Amazon and Microsoft are unveiling multibillion-dollar infrastructure bets, Micron is choosing Hiroshima for next-generation memory, and geopolitics is slicing right through the semiconductor stack. We’ll also dig into Washington’s latest export-control twist, and a Justice Department case alleging that hundreds of restricted Nvidia GPUs slipped into China. Let’s dive in...

Story one... Micron is going big on high-bandwidth memory in Japan. The company plans to invest about 1.5 trillion yen — roughly 9.6 billion dollars — to build a new AI memory plant in Hiroshima, focused on advanced HBM that feeds the world’s AI accelerators. Japan’s Ministry of Economy, Trade and Industry is prepared to back the project with up to 500 billion yen in support. Construction is set to begin next May at an existing site, with shipments targeted around 2028.

Why it matters... HBM has become the oxygen of large AI clusters, and supply is tight. This expansion positions Micron to challenge SK hynix and others — while diversifying manufacturing away from Taiwan, a strategic priority for companies and governments. It also fits Tokyo’s broader effort to rebuild domestic chip capacity, alongside projects tied to TSMC and IBM. That’s a lot of capacity — and a lot of subsidies — chasing AI demand. Reported Saturday, November 29, by Reuters.

Let’s move to North America for story two. Amazon is turning the dial to eleven on public-sector AI compute. The company has committed up to 50 billion dollars to expand artificial intelligence and supercomputing for U.S. government customers via AWS, adding around 1.3 gigawatts of high-performance capacity across AWS’s government regions: Top Secret, Secret, and GovCloud. Construction is set to start in 2026. On the same day, Amazon also unveiled a separate 15 billion dollar plan to grow data center campuses in Northern Indiana, adding 2.4 gigawatts and about 1,100 jobs.

The takeaway... AI isn’t just a commercial cloud story anymore — it’s a national capacity story. Agencies will get access to services like SageMaker, Bedrock, and partner models such as Anthropic’s Claude, inside hardened government regions. And stateside energy markets will be asked to support another multi-gigawatt wave of demand. Google and Oracle are pursuing similar public-sector wins, but Amazon’s message is clear — AWS intends to be the default AI backbone for Washington. Reported November 24 by Reuters.

Story three... Microsoft is planting a 10 billion dollar AI flag in Europe. The company will invest in a large AI data hub at Sines, on Portugal’s Atlantic coast — working with developer Start Campus, UK-based Nscale, and Nvidia. The plan calls for deploying about 12,600 next-gen GPUs and tapping Sines’ subsea cable nexus and green energy potential. It’s one of Europe’s largest AI infrastructure investments — and part of Microsoft’s push to double data center capacity across 16 European countries by 2027.

Strategically... Microsoft gets a landing point on the Atlantic that stitches into transatlantic cables and can scale power and cooling, while giving the EU another sovereign-friendly compute anchor as it wrestles with AI rules and industrial policy. Microsoft’s Brad Smith framed Sines as a benchmark for the responsible and scalable development of AI in Europe. Reported mid-November by Reuters and Bloomberg.

Story four takes us to the U.S.–China policy fault line. The White House is considering whether to allow Nvidia’s H200 AI chips to be sold into China. Commerce Secretary Howard Lutnick told Bloomberg the decision sits on President Trump’s desk — a potential softening after years of tightening export controls. The H200, with its larger high-bandwidth memory footprint, is a meaningful leap for data-crunching models. Nvidia, for its part, says there are no talks to sell its newest Blackwell chips into China.

At the same time, Beijing appears to be moving in the opposite direction. Chinese regulators have barred ByteDance from using Nvidia chips in new data centers — a striking sign of efforts to decouple and push domestic silicon into state-backed facilities. Whatever the final call from Washington, the message to the market is volatility... customers in China face shifting ceilings, Nvidia faces whipsaw demand and product tailoring, and developers everywhere are planning for a world where supply — not just specs — determines capability. Reported last week by Reuters, drawing on Bloomberg and The Information.

And story five... enforcement is heating up. The U.S. Department of Justice has charged four individuals — two U.S. citizens and two Chinese nationals — in an alleged scheme to illegally export restricted Nvidia GPUs to China. Prosecutors say the group routed hardware through Malaysia and Thailand to evade controls, with two completed shipments moving about 400 A100 GPUs between late 2024 and early 2025. Authorities say additional attempts involving H100-equipped HPE supercomputers and 50 H200 chips were disrupted — and cite roughly 3.89 million dollars in wire transfers tied to the operation.

The case crystallizes how export controls now extend into the gray zones of reseller networks and front companies. It will also feed Congressional pressure for tighter chip-security legislation — while chipmakers warn that blunt-force kill switches or backdoors could create fresh cybersecurity risks. Coverage over the past week via Ars Technica and Cybernews.

Stepping back... what’s the signal here? First, the AI buildout isn’t slowing — Micron adds HBM capacity, and Amazon and Microsoft add gigawatts. Second, geography is strategy — Hiroshima, Indiana, and Sines are as much about energy, water, and cables as they are about chips. Third, policy risk is now an engineering constraint — Washington may open or close access to specific Nvidia parts, and Beijing is pushing giants like ByteDance off U.S. silicon. And finally, enforcement is getting more granular... going after shipments, intermediaries, and even SKU variants.

That’s it for today’s rundown... Micron’s 9.6 billion dollar HBM bet in Japan; Amazon’s up to 50 billion dollar government AI build and its 15 billion dollar Indiana expansion; Microsoft’s 10 billion dollar Portugal push; a Washington-and-Beijing chip chessboard featuring H200s and a ByteDance clampdown; and a DOJ case that shows just how hard it is to bottle up GPUs in a global market. We’ll see you tomorrow — with the next wave of launches, labs, and laws reshaping the AI economy.

Thanks for listening, and a quick disclaimer: this podcast was generated and curated by AI using my and my kids’ cloned voices. If you want to know how I do it or want to do something similar, reach out to me at emad at ai news in 10 dot com. That’s ai news in one zero dot com. See you all tomorrow.