Code Red, Optical Highways, and Memory Squeeze

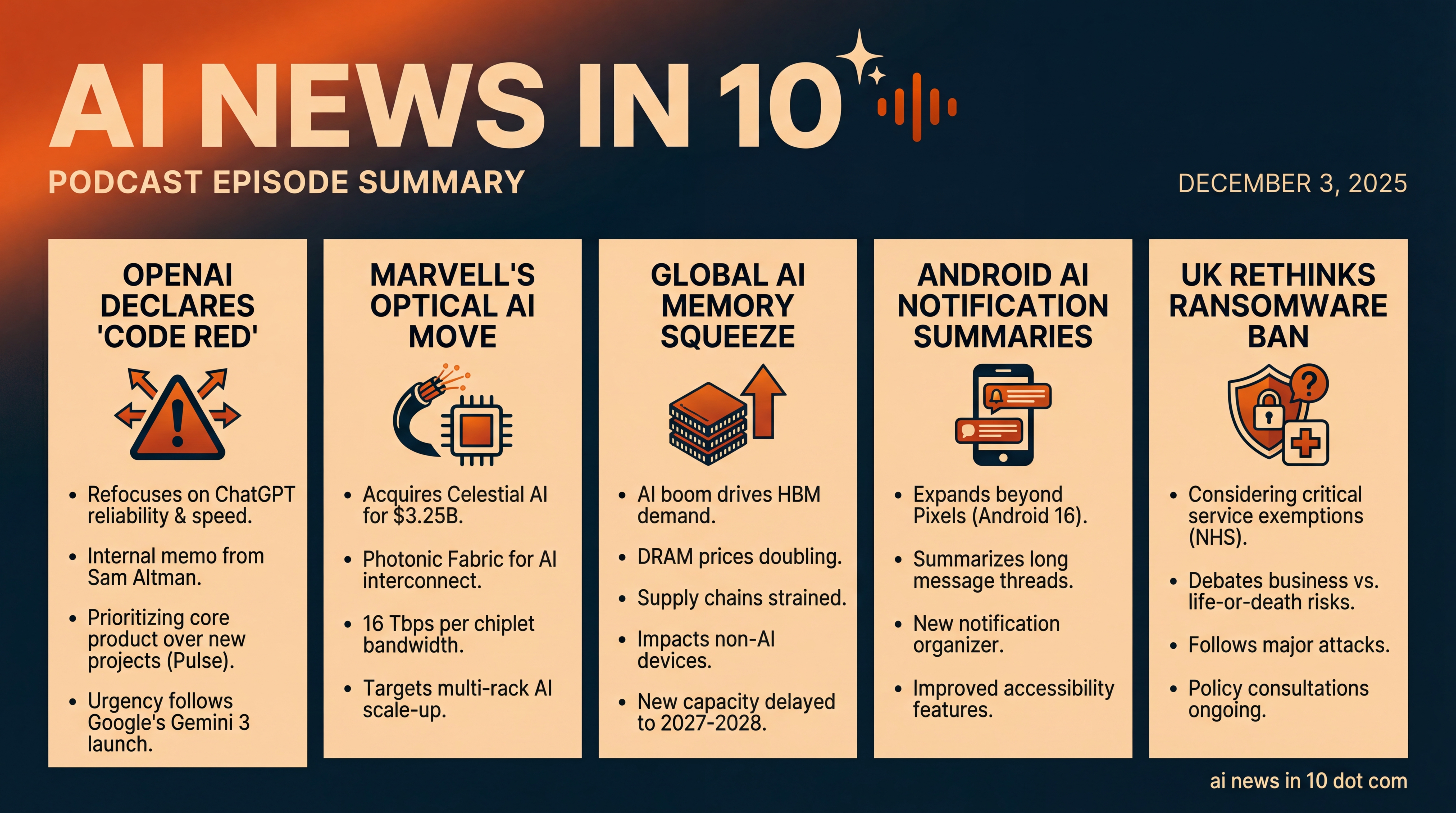

OpenAI refocuses ChatGPT as Google’s Gemini 3 raises the bar, while Marvell bets big on optical interconnects and the AI boom tightens the global memory market. Plus, Android’s AI notification summaries expand and the UK weighs a ransomware payment ban for critical services.

Episode Infographic

Show Notes

Welcome to AI News in 10, your top AI and tech news podcast in about 10 minutes. AI tech is amazing and is changing the world fast. For example, this entire podcast is curated and generated by AI using my and my kids' cloned voices...

Here’s what’s making waves today, Wednesday, December 3rd, 2025... OpenAI hits the panic button to refocus ChatGPT as Google’s Gemini 3 turns up the heat. Marvell makes a multibillion-dollar move in optical AI networking with a Celestial AI acquisition. The AI boom is squeezing global memory supply chains and pushing prices higher. Google is rolling out AI-powered notification summaries to more Android phones. And the UK is debating how far to go with a potential ban on ransomware payments — especially when hospitals are at risk.

Let’s dive in.

[BEGINNING_SPONSORS]

Story one — OpenAI has declared a code red. According to multiple reports, CEO Sam Altman sent an internal memo on December 1 directing the company to prioritize improving ChatGPT’s everyday experience — speed, reliability, personalization — and to delay other initiatives like advertising, shopping agents, and a personal assistant project reportedly called Pulse.

The urgency follows Google’s launch of Gemini 3, which has posted strong benchmark results and lured some high-profile users. OpenAI’s move is about focus — shoring up the core product that millions rely on every week.

There’s also a chip-side subplot to watch. Nvidia’s CFO said yesterday the company has not finalized its proposed $100 billion systems deal with OpenAI, clarifying that none of the potential chips for that arrangement are counted in Nvidia’s existing backlog. It’s a reminder that even the biggest AI partnerships are complex — and not fully baked until they’re signed.

Meanwhile, some analysts framed Altman’s panic button as a moment that demands tangible reprioritization, not just rhetoric. Translation: users should see noticeable improvements in ChatGPT soon... if the pivot is working.

Why it matters... The competitive bar keeps rising, and the economics are brutal. OpenAI’s user scale and valuation haven’t yet translated into durable profitability, while operating costs — and the infrastructure needed to keep pushing model quality — are enormous. The code red is an admission that product polish and reliability matter as much as model breakthroughs in a market where switching costs for users keep shrinking.

Story two — Marvell goes optical. The chipmaker announced a $3.25 billion cash-and-stock acquisition of Celestial AI, a startup known for its Photonic Fabric — a co-packaged optical interconnect designed to move data with light rather than electrical signals inside and between AI systems.

Marvell says Celestial’s first-gen chiplet delivers a staggering 16 terabits per second of bandwidth in a single package, aimed squarely at next-generation multi-rack AI scale-up fabrics. The deal is expected to close in early 2026, with revenue contributions beginning in the second half of fiscal 2028 and a targeted $1 billion annualized run rate by late fiscal 2029.

Investors liked it — shares jumped on the news, and the company highlighted an Amazon warrant tied to future photonics purchases through 2030. That’s an endorsement, and a signal of hyperscaler interest in optical scale-up networks.

Marvell frames this as transformational, expanding from traditional scale-out Ethernet into the higher-bandwidth, lower-latency domain needed to bind hundreds of accelerators into a single logical compute pool. It’s also a direct answer to the rising interconnect bottleneck in AI training clusters.

The strategic read... As AI clusters sprawl beyond a single rack, copper reaches its limits. Optical chiplets and co-packaged optics feed the beast without torching power budgets. If Marvell can execute, Celestial AI gives it a differentiated seat at the table alongside Nvidia and Broadcom for the interconnect layer of AI data centers.

Story three — The AI boom is colliding with the memory market, hard. A global supply squeeze is emerging as demand for DRAM — and especially high-bandwidth memory — surges. Prices have doubled in some cases, with inventories at rock-bottom levels.

Chipmakers are prioritizing high-margin HBM for AI workloads, leaving fewer conventional memory parts for smartphones and PCs. Some manufacturers and retailers are rationing memory products in Asia, and handset makers warn of price hikes. The kicker: capacity expansions for the newest HBM generations won't fully arrive until 2027–2028. That's a long wait in AI years.

Why it matters for builders and buyers... If you're planning AI infrastructure, the memory line item could be your biggest surprise. For consumers, those component costs ripple outward into device prices, and they could squeeze device makers' margins. For now, only the deepest-pocketed players can comfortably outbid rivals for scarce HBM.

[MIDPOINT_SPONSORS]

Story four: Google is bringing AI notification summaries to more Android phones. The feature, first seen on Pixel phones, compresses long, chaotic message threads into bite-sized updates you can parse at a glance.

With Android 16, Google is expanding access to non-Pixel devices from partners like Samsung, along with a new notification organizer to quietly corral low-priority alerts. Accessibility gains are part of the package too, with broader Expressive Captions support and improvements to TalkBack and Voice Access. It’s a small but meaningful quality-of-life upgrade for anyone drowning in group chats.

Takeaway... In a world where AI assistants compete on convenience, every friction cut counts. Summaries don’t require a flashy model demo — they meet people where they already live: notifications. If adoption is wide and latency is low, this is the kind of sticky feature that keeps users inside Android’s default experiences.

Story five — The UK is rethinking a hard line on ransomware. Ministers have floated a ban on ransom payments to cybercriminals, but they’re now considering national security exemptions for critical services like the NHS after a spate of disruptive attacks — including one that severely affected hospital operations and was cited as possibly contributing to a patient death.

Policymakers are caught between two tough realities: bans can remove the criminals’ business case, but in a life-or-death service outage, the pressure to pay can be overwhelming. Consultations with industry and agencies are ongoing before the policy is finalized.

What it means... 2025’s surge in supply-chain hacks and data theft shows how intertwined systems have become. Even well-secured platforms can be exposed via connected apps and vendors — see the recent Salesforce–Gainsight incident affecting hundreds of organizations as a case study in third-party risk. The UK debate underscores that cyber policy now has to account for cascading, real-world harms — not just technical best practices.

Quick recap... OpenAI’s code red is a wager that product polish beats model FOMO. Marvell’s Celestial AI buy bets big on optical highways for AI clusters. The global memory crunch could keep hardware costs elevated into 2027. Android’s AI summaries aim to tame notification overload. And the UK is threading a policy needle on ransomware to protect critical services.

We’ll keep watching how these stories evolve — and how they shape the tools you use and build next.

Thanks for listening, and a quick disclaimer: this podcast was generated and curated by AI using my and my kids' cloned voices. If you want to know how I do it, or want to do something similar, reach out to me at emad at ai news in 10 dot com, that's ai news in one zero dot com. See you all tomorrow.