Funding Frenzy, Faster Models, Real Costs

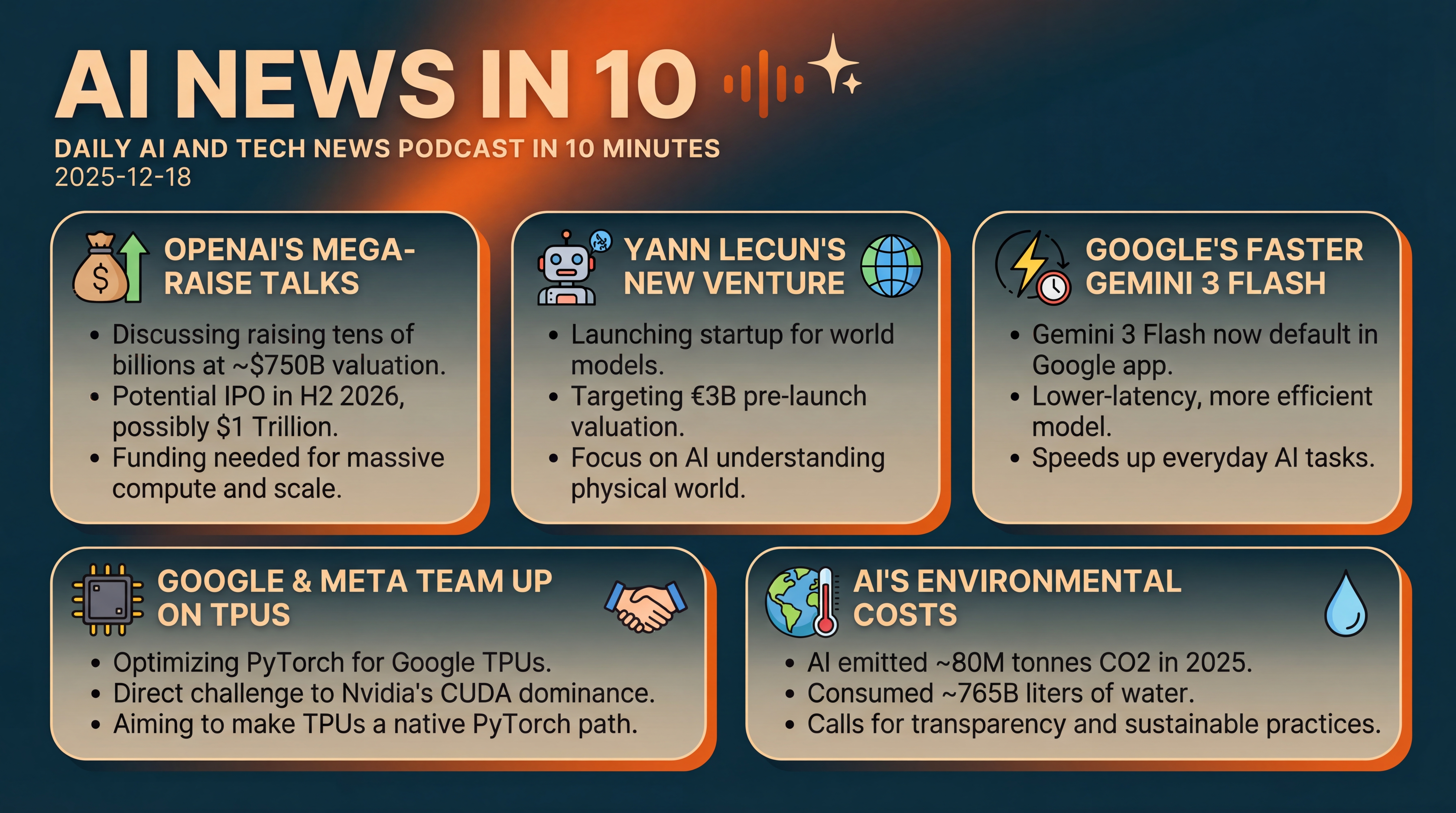

OpenAI eyes a massive raise, Yann LeCun spins up a world models startup, and Google makes Gemini 3 Flash the snappy default. Plus, Google and Meta push PyTorch onto TPUs as new research lays bare AI’s energy and water footprint.

Show Notes

Welcome to AI News in 10, your top AI and tech news podcast in about 10 minutes. AI tech is amazing and is changing the world fast. For example, this entire podcast is curated and generated by AI using my and my kids' cloned voices...

Here’s what’s on deck today... OpenAI is reportedly lining up a huge new funding round at a sky-high valuation. Meta’s longtime AI chief, Yann LeCun, is spinning up a startup with a multibillion-euro target. Google is flipping the switch on a faster Gemini 3 Flash model as the default in its app. Google and Meta are teaming up to make PyTorch hum on TPUs — a direct shot at Nvidia’s software dominance. And a new study tallies the year’s AI energy, carbon, and water tab... and it’s a wake-up call. Let’s get into it.

Story one: OpenAI’s valuation ambitions are back in the headlines... and the numbers are getting even bigger. Reports say the company is discussing raising tens of billions of dollars at a valuation of around seven hundred fifty billion dollars. That would be a sharp jump from the roughly five hundred billion dollar valuation attached to a large secondary sale in October, where current and former employees sold shares.

The chatter even includes a potential IPO as early as the second half of 2026, with some scenarios imagining a trillion-dollar debut. To be clear, these are early discussions, not guarantees.

Why it matters... scale. Training long-horizon, reasoning-heavy models — and deploying them across consumer apps, enterprise tools, and agents — requires staggering compute and long-term power contracts. OpenAI already has multibillion-dollar partnerships, and more capital would let it pre-buy capacity, expand data pipelines, and keep pace with rivals racing up the same hill.

Investors are weighing two competing realities: the urge to own the category leader during an AI land grab... and lingering questions about when these bets turn into durable profits beyond cloud-driven usage spikes.

Here’s one quick lens. If you buy the idea that whoever controls compute controls progress, then cornering long-term access to GPUs, TPUs, and energy becomes a strategy in itself. The valuation may raise eyebrows — but it also reflects the belief that state-of-the-art models can turn into everyday assistants, office copilots, and specialized agents people actually use, daily and at scale.

Story two: a new player is entering the arena — well, a very familiar name with a new badge. Yann LeCun, Meta’s outgoing Chief AI Scientist and a 2018 Turing Award winner, is launching a startup reportedly targeting a pre-launch valuation around three billion euros, raising about five hundred million to get it off the ground.

Alexandre LeBrun — best known for founding Nabla — is said to be the CEO, and the focus will be world models: representation learning that helps AI systems understand and interact with the physical world across robotics, autonomy, and other embodied tasks.

The timing is notable. LeCun has been a vocal proponent of open research, critical of closed-data, closed-weights approaches, and a skeptic of near-term AGI hype. Yet this venture is aiming at nothing less than superintelligent systems grounded in richer world understanding. That juxtaposition... is going to be fascinating to watch. And the fundraise — at multi-billion-euro, pre-product levels — shows that top-tier researchers with credible roadmaps can still command eye-popping valuations.

If you’re tracking what to look for next, watch for hiring signals, any open-source or open-weights commitments, and early research demos around self-supervised learning from video and interactions. If the thesis is truly world models, expect pretraining that blends simulated and real-world data, with agents learning to plan, predict, and transfer skills across tasks.

Story three: Google is speeding things up. Gemini 3 Flash — a lower-latency, more efficient model — is rolling out as the new default in the Gemini app and across Google’s AI surfaces. Flash builds on the reasoning and multimodal advances from Gemini 3 Pro, but it’s tuned for snappy responses and cost-effective scale.

Think faster, cheaper, still capable... the model you reach for when you need near-instant answers that can still parse text, images, and video. It’s arriving across the developer stack — AI Studio, the Gemini API, Antigravity, Android Studio, the Gemini CLI, and Vertex AI — so builders get a consistent, speedy baseline.

Google is positioning Flash as the go-to for everyday use, while Pro and deeper-thinking modes handle heavier reasoning or longer, multi-step tasks.

Why this matters... defaults drive behavior. If the default is materially faster, more concise, and good enough for most questions, people will lean on it more. And for developers and enterprises, a cheaper-to-serve default unlocks broader rollouts — customer support, internal knowledge bots, code helpers — without blowing the budget. Watch how quickly Flash shows up in Search’s AI mode, and how it changes usage of the higher-end tiers.

Story four: a strategic shot at Nvidia’s biggest advantage — the software stack. Google is working to make its TPUs deeply compatible with PyTorch through an effort known internally as TorchTPU, and it’s doing so in close collaboration with Meta, the primary backer of PyTorch.

That’s a big deal. Today, most AI development happens in PyTorch, and the easiest, best-supported path to performance is Nvidia GPUs via CUDA. If PyTorch on TPUs can just work — with strong kernels, compilers, and tooling — the switching costs drop dramatically.

The incentive for Meta is obvious: reduce dependence on Nvidia and give itself — and the wider PyTorch ecosystem — another high-performance lane for training and inference. Google, for its part, has been expanding access to TPUs beyond its own cloud, even selling them directly for on-prem data centers as demand soars. It’s not just about chips... it’s about the developer experience. Make the default framework great on your hardware, and suddenly procurement and portability conversations look different.

Zooming out, Nvidia’s CUDA moat was built over years of painstaking software optimizations, libraries, and community support. The fastest way to chip away at that advantage is to meet developers where they are — PyTorch — and make an alternative path feel native. If Google and Meta deliver here, expect more teams to try TPU-based training runs, especially where availability or price tilts the math.

Story five: the environmental ledger for AI in 2025 is coming due — and it’s sobering. New research estimates that AI activity this year emitted up to eighty million tonnes of CO₂ — roughly comparable to the annual emissions of New York City — and consumed around seven hundred sixty-five billion liters of water.

The study is among the first to isolate AI’s footprint from broader data center usage. It paints a picture of hyperscale AI facilities — particularly in the U.S., China, and Europe — drawing power at aluminum-smelter scales, with electricity demand projected to more than double by 2030. In the U.K. alone, a hundred to two hundred such facilities are in development, with some single sites expected to emit more than a hundred eighty thousand tonnes of CO₂ per year.

The call to action is simple: greater transparency, better accounting, and real accountability from tech firms on the true resource costs of AI.

Why this hits now... the industry is simultaneously rolling out more capable models, incentivizing massive training runs, and racing to deploy agentic systems that are always on. That puts pressure on grids and water systems, intensifies siting debates, and forces trade-offs between performance and sustainability. Expect to hear more about efficiency gains, such as quantization, sparsity, and smarter compilers, and about power purchase agreements, water recycling, and colocating compute with renewables. But the headline is clear: the status quo won’t scale cleanly.

Before we wrap, a quick connective thread across today’s stories. OpenAI’s capital hunt underscores the cost of chasing frontier capability. LeCun’s venture is a bet that richer world models will unlock the next era of robotics and embodied intelligence. Google’s Flash push shows that speed and efficiency are product features, not just infra details. And the Google–Meta PyTorch-on-TPU effort signals that the next competitive frontier is making alternative hardware feel native to the dominant developer workflow — all while the environmental bill is coming into focus, and getting steeper.

That’s the download: OpenAI’s mega-raise talks, LeCun’s new lab taking shape, Gemini 3 Flash becoming the faster default, Google and Meta working to erode Nvidia’s software moat, and a reminder that AI’s pace has real-world costs. We’ll keep tracking how the funding, the frameworks, and the footprints evolve... and what they mean for the tools you use every day.

Thanks for listening, and a quick disclaimer: this podcast was generated and curated by AI using my and my kids' cloned voices. If you want to know how I do it or want to do something similar, reach out to me at emad at ai news in 10 dot com. That's ai news in one zero dot com. See you all tomorrow.